Table of Contents

1 Compiling Juvix to Michelson

Juvix Is a dependently typed programming language inspired by Idris,

F★, and Coq. Juvix is designed as a smart contract verification and

execution language, where efficient compilation is key, as otherwise

any inefficient compilation to the primitives of a virtual machine on

a decentralised blockchain will result in extra funds spent in order

to execute any given program (for more background on smart contract

language design, see The Why of Juvix).

Juvix targets Tezos through

its primitive script known as Michelson. Michelson is a peculiar

language in that it is a typed stack based language in the lineage of

Forth and Kitten. However, unlike these languages, Michelson lacks

primitives to make standalone named functions.

Before going forward, we will need to gain some intuition on how stack based languages operate.

1.1 Intuition on Stack Based Languages

In a stack based language, function arguments are not named, instead

arguments are taken off of the execution stack, and functions return

their result to this same stack.

The first example we shall look at is the square function

: square dup * ;

:means define a new word, in this casesquare.;signals the end of the definition.

- the

dupfunction takes the item on top of the stack then duplicates it. *multiplication operator, as one expects.

We shall now trace the execution of the above program with 3.

3 square

- Step 1

- Reading:

3 - Stack: [ 3 ]

- Reading:

- Step 2

- Reading:

Square:dup. - Stack: [ 3 3 ]

- Reading:

- Step 3

- Reading:

Square:*. - Stack: [ 9 ]

- Reading:

What is interesting about this program is that every time we need to

use a variable more than once, we need to call dup before consuming

it. Thus stack based languages have similarities to Linear Logic. This

detail will be expanded upon in the next section.

Other important functions to keep in mind for stack machines are the stack shuffling operations

swap∷ [ 2 3 ] ⟶ [ 3 2 ]rot∷ [ 2 3 4 ] ⟶ [ 3 4 2 ]

Michelson in particular has functions which can take other

functions. The important one to remember is dip n which protects the

top n-1 items of the stack while executing on the nth item and

below.

With this out of the way, we can finally talk about how to compile effectively to Michelson.

1.2 Optimizations to Consider

Any standard language compiling to a stack machine must consider how

to effectively translate their variable semantics into efficient stack

shuffling. Since every operation in Michelson has a cost, doing this

efficiently is imperative.

1.2.1 Optimizations Relating to Efficient Stack Placement

Minimizing the number of

dupsAn illustrative example is the following

let f x = x

Since Michelson lambdas have a high cost and it is quite limited in the forms it accepts, we opt to beta expand most lambdas away. Juvix is in a unique position due to part of our type theory known as Quantitative Type Theory (QTT), which tracks the usage of all variables. Under a normal compiler pipeline this would mean that most structures can avoid being in garbage collection, however for Michelson this means we can eliminate a single

dupanddig drop. Thus, if were to compile the application offwithin some nested computation where thexis stored five slots back in stack, then without QTT or some notion of linearity then the expansion would look like the following.5 dug dup 6 dig

Indeed, there would be no way of knowing that it's safe to completely move away. However, if we were to consider Quantitative Type Theory, with

xonly being used once then we can always safely write5 dugPlacement of variables after lookup

A more generic optimization is looking up a variable from the environment, which effectively caches the value on the stack until it is consumed.

Let us consider the functionfbelow where the usage ofxis 3. Let us consider the application wherexis 5 slots away.let f = x * x

Then the generated code would be.

5 dug dup 6 dig dup *

Thus we can see that we did not have to do another

dug digcombo, but insteaddupthe current variable.This technique relies on propagating usages effectively, as we have to know whether to

dupa value or just move it to be consumed. As currently implemented, there is one restriction that will make this behavior less optimal than it should be. These inefficiencies will be discussed in the next section.

1.2.2 Optimizations Relating to Curry Semantics

For those unfamiliar with currying, in an ML family language,

functions only take a single argument.

f a b c = times (a + b + c) g = f 2 3 h = g 5 6 l = f 2 3 5 6 j = f 2 3 4

Although it may look like each function takes multiple arguments,

notice how this example works. f explicitly takes 3 arguments up

front (named a b and c), but in reality takes a fourth unarmed

argument. As application goes we show sending in 2 arguments to f

twice, showing that you can partially apply f and get back a new

function that takes two more arguments, where h finally makes it

whole. We also can see that we can send in more arguments than there

are names for and also the exact number of arguments. When compiling

down to a language without curry semantics, it's quite important to

get this optimization right.

- Push enter and eval apply

Historically there have been two techniques in literature for making currying fast. Strict languages likeMLandLispsopted to go for a technique calledeval/apply, while traditionally lazy languages likeHaskellwent forpush/enter. However, both lazy languages and strict languages found thatpush/enteris more complex thaneval/apply.

With that said, forMichelsonwe have opted for a hybrid approach.

The rationale for this mix is the following:

Michelson has no concept of a heap

The paper linked under, lazy languages, above, demonstrates that both

push/enterandeval/applyoperates under an abstract machine with three components. These components arethe code,the stack, andthe heap. For Michelson, we clearly havethe codeandthe stack(the code is the expression being compiled, and the stack is the result stack).The heaphowever has to be simulated withthe stack.The heapis responsible for the quick storage of all variables and closures used in any expression. Since all we have is a stack, these variables must be stored there, which means that if we have to retrieve a variable that is further back, one has to pay gas for this retrieval.Michelson is a stack machine with lambda

- Another deciding factor, is that Michelson is a stack

machine, specifically with an inefficient lambda

operation. This operation is inefficient in that all

Michelsonfunctions take a single argument, they can't for example consume multiple items off the stack. To simulate multiple arguments, one has to tuple them up before sending it in, then un-tuple them once in the lambda. To get a good intuition on the matter, let us consider a lambda takingnarguments: - Before we construct the Michelson lambda, we must tuple

them all. This costs

n-1pairs - Inside the lambda we constructed we have to un-pair the

argument to use the

narguments. This is not as simple as calling un-pairn-1times. Instead we have to write

dup; car; dip { cdr .... }

the

cargets the first element of the pair, andcdrget the rest of the pair. We have to calldip, as we want thecaras is, but to move past it to unpair the other elements.This process goes on for another

n-2times.

As we can see, the cost of lambda has a high fixed cost, so in our compilation we make sure to build up no intermediate lambdas and inline and beta expand as aggressively as possible, leaving those that another

Michelsonprimitive (such as map) requires one.

In our actual implementation when we come across an exact application: we just inline it immediately (this corresponds to exact ineval/apply). If we come across a partially applied function, we name the arguments on the stack for future reference, and push a virtual (thus it has no cost to lookup!) closure on the stack that can be later fully applied. For over application, we note the arguments which can't be named, we generate names from them and carry them over as extra arguments to the result of the applied function.- Another deciding factor, is that Michelson is a stack

machine, specifically with an inefficient lambda

operation. This operation is inefficient in that all

With these optimizations, curry semantics for

Juvixposes no overhead for generatingMichelson.

1.2.3 Various Other Optimizations

Lambda Removal

As discussed above, Lambdas are expensive, so we do beta reduction and inlining aggressively to avoid them. This beta reduction does not move the values on the stack at all, instead just naming them for when they are actually used.

Beta reduction in Michelson turn out to be safe due to the limitations of what can be expressed inMichelson. Firstly,Michelsondoesn't allow for custom types, instead providing their own data structures. So we currently can't send in any recursive structures, such as a list. Secondly,Michelsonlacks functions and the typing to support converting recursive functions into CPS style (Michelsononly has first-order typing). Thus all simple tail recursive functions will have to be translated into aMichelsonloop before being sent into the backend.Virtual stack

This was also discussed above, we will just note items stored on the virtual stack that is not stored on the

Michelsonstack:- Partially-applied functions

Constants

Specifically, these are propagated even through primitive functions if all the arguments are constants!

1.3 Limitations of our Current Compiler Pipeline

Currently our compiler is under the phase of rapid development,

so many of the phases are quite immature. Namely, we lack a

normalization step for our Core intermediate representation (IR). We

plan on adding an Administrative Normal Form (ANF) transformation soon

that would open up many optimizations while generalizing the

Michelson backend logic (we would get constant propagation, and a

proper generalized eval/apply model).

Below we shall list known optimizations that can easily happen given a proper ANF form.

1.3.1 Improvements from Normalization

More Effective usage movement

Currently, when we move a variable forward, we move all usages except 1 forward with it unless the variable only has one usage left. This means, we always keep behind a copy of the variable behind.

Let us see the example we used before.let f = x * x

Let us suppose

x, like before, is five positions back, however now consider the usage of x being used twice and thrice.

Twice:5 dug dup 6 dig 6 dug *

thrice (as before):

5 dug dup 6 dig dup *

As we can see, if we only use

xtwice we pay a cost of digging five again to move the copy over. The firstxwe moved over has a usage one where we are going to use it right away, so this usage is saved. Thus the only freexis the one six stack positions away!

A smarter plan would be to just move all the usages up, and if any usages are left over after using them, move them right before the consumption point.

So to illustrate, consider the same example from above:

Twice:5 dug dup *Thrice (as before):

5 dug dup dup 3 dip *

Notice this is much more efficient, as we don't have to go six positions back immediately, and when we do have to leave a copy behind it is only the minimum number three slots away.

However this optimization is not safe currently. Consider the example below:f a (x + y) b

If we were to do this technique with

xandyhaving extra usages, then moving them back would causebto no longer be an argument, instead replacing it with themselves!

Once ANF form is up, the computation will instead be like thislet xy0 = x + y in f a xy0 b

where every function takes primitives, so these moves would have happened before, thus allowing this optimization to take place.

-

Another benefit of ANF is that with the extension of join points, we can effectively fuse (merge) independent loops into a single loop when they are composed together. This optimization will only show on operations through numbers sadly, as recursive structures in

Michelsonthat are not primitive are not permitted.

1.4 Final Thoughts

Overall, Juvix's Michelson backend is efficient while allowing for

high level idioms. The costs for currying are gone with the usage of

smart and well studied techniques. Usage tracking and inference allows

for the programmers to write code without worrying about calling dup

or drop by hand. Further, our aggressive inlining of lambdas allow

abstractions used for readability of code to have no performance

penalties. Many more optimizations are coming that should allow for

even faster generated Michelson. In future articles, there will be

example smart contracts written in Juvix, in order to showcase that

Juvix' Michelson backend can not only make your smart contracts on

Tezos faster, but also safer.

Written by Jeremy Ornelas, core developer and researcher at Metastate.

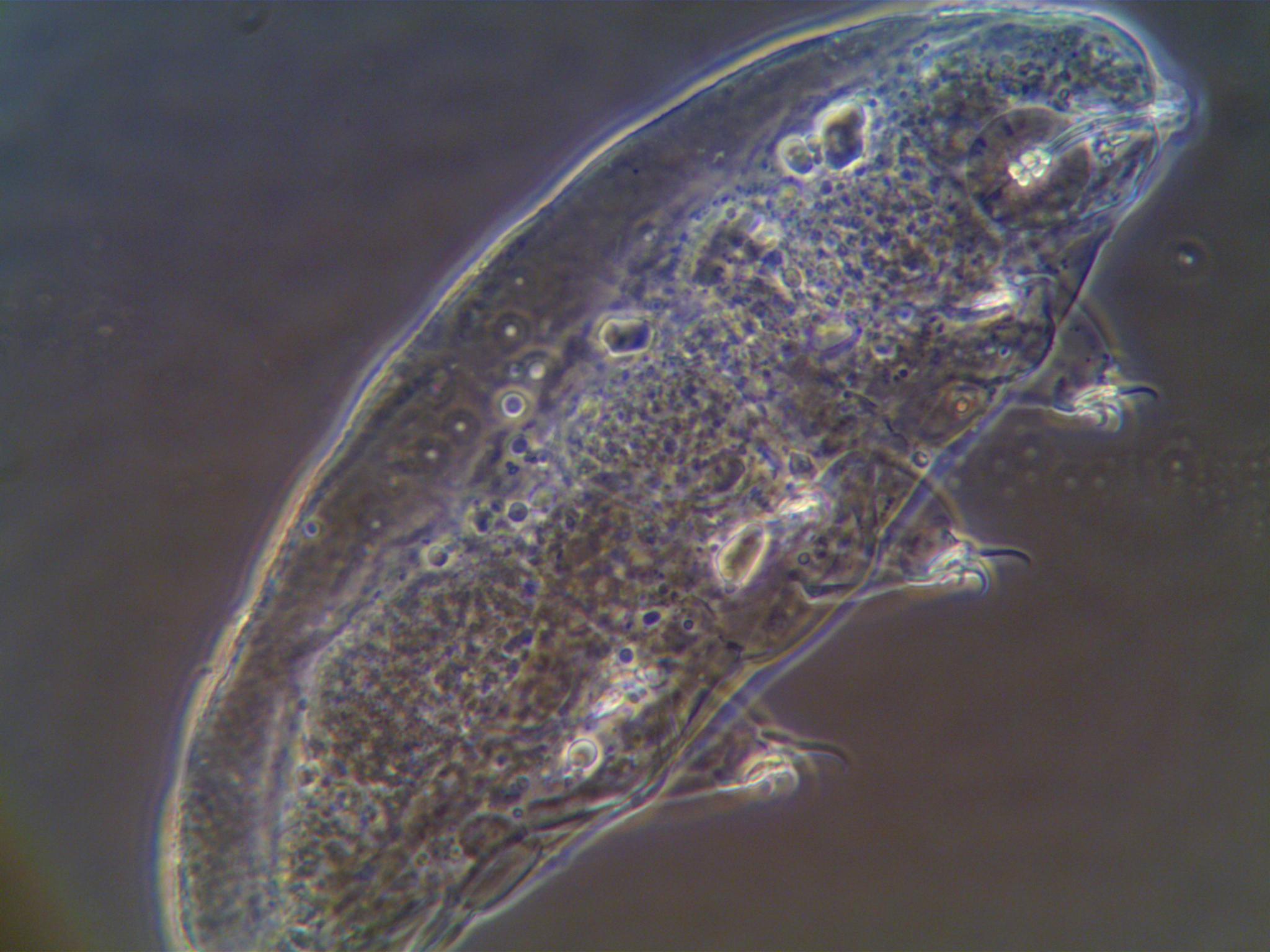

Image Credits: Water Bears from Encyclopedia of Life.

Metastate has ceased its activities on the 31st of January 2021. The team members have joined a new company and working on a new project. If you're interested in programming language theory, functional programming, type theory, formal verification, or compiler engineering positions, check out the open positions at Heliax.

If you have any feedback or questions on this article, please do not hesitate to contact us: hello@heliax.dev.